An Autonomous Killer Drone “Hunted Down” Humans Of Its Own Accord For The First Time

Published on June 3, 2021 at 9:15 AM by Mc Noel Kasinja

An autonomous drone has reportedly chased down and attacked people of its own accord.

It would be the first time artificial intelligence (AI) has attacked human beings in this way. It is unclear whether the drone killed anyone during the attack, which took place in Libya in March last year.

A UN Security Council report states that on March 27, 2020, Libyan Prime Minister Fayez al-Sarraj launched “Operation PEACE STORM”, in which unmanned combat aerial vehicles (UCAV) were used against Haftar Affiliated Forces (HAF). Drones have been used in battle before, but this appears to be the first time they operated without human input once an initial, human-directed attack had taken place.

“Logistics convoys and retreating HAF were subsequently hunted down and remotely engaged by the unmanned combat aerial vehicles or the lethal autonomous weapons systems such as the STM Kargu-2 (see annex 30) and other loitering munitions,” the report says.

“The lethal autonomous weapons systems were programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true ‘fire, forget and find’ capability.”

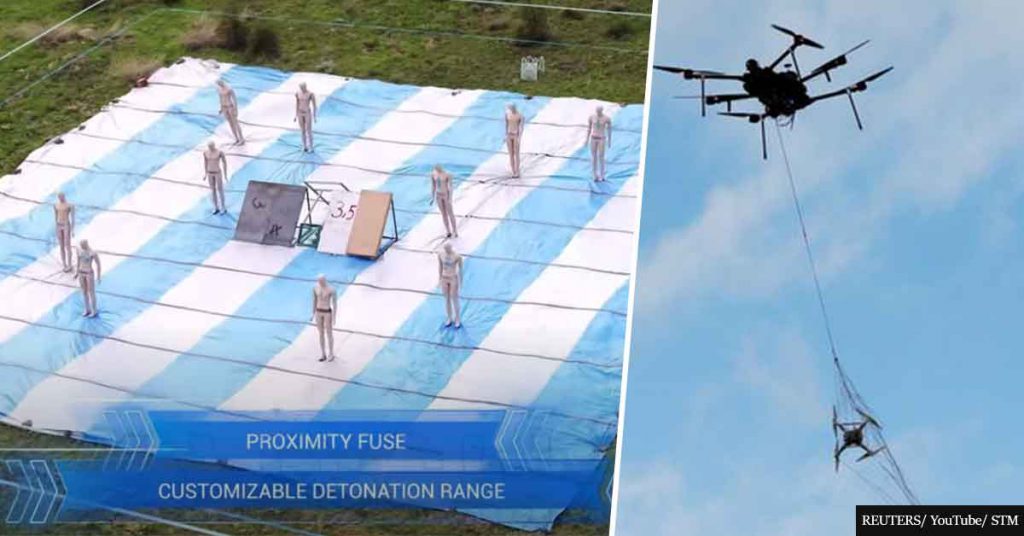

The KARGU is a rotary-wing attack drone made for anti-terrorist missions and asymmetric warfare.

According to its manufacturer, STM, the drone “can be effectively used against static or moving targets through its indigenous and real-time image processing capabilities and machine learning algorithms embedded on the platform.” A promotional video shows it targeting mannequins in a field and detonating an explosive charge on them.

According to the report, these drones also proved highly effective against human combatants.

“Units were neither trained nor motivated to defend against the effective use of this new technology and usually retreated in disarray,” the report states. “Once in retreat, they were subject to continual harassment from the unmanned combat aerial vehicles and lethal autonomous weapons systems, which were proving to be a highly effective combination.”

The report did not detail any deaths the attack may have caused, even though it said the machines were “highly effective” in helping to inflict “significant casualties” on the enemy’s surface-to-air missile systems. If the account is accurate, this is likely the first time a human has been attacked, or killed, by a drone operated by artificial intelligence.

The attack, whether deadly or not, will not be welcomed by activists campaigning against the use of “killer robots”.

“There are serious doubts that fully autonomous weapons would be capable of meeting international humanitarian law standards, including the rules of distinction, proportionality, and military necessity, while they would threaten the fundamental right to life and principle of human dignity,” Human Rights Watch says. “Human Rights Watch calls for a preemptive ban on the development, production, and use of fully autonomous weapons.”

A major debate is currently raging over how much trust we should place in AI, since the consequences of trusting it too much could prove catastrophic for humanity.